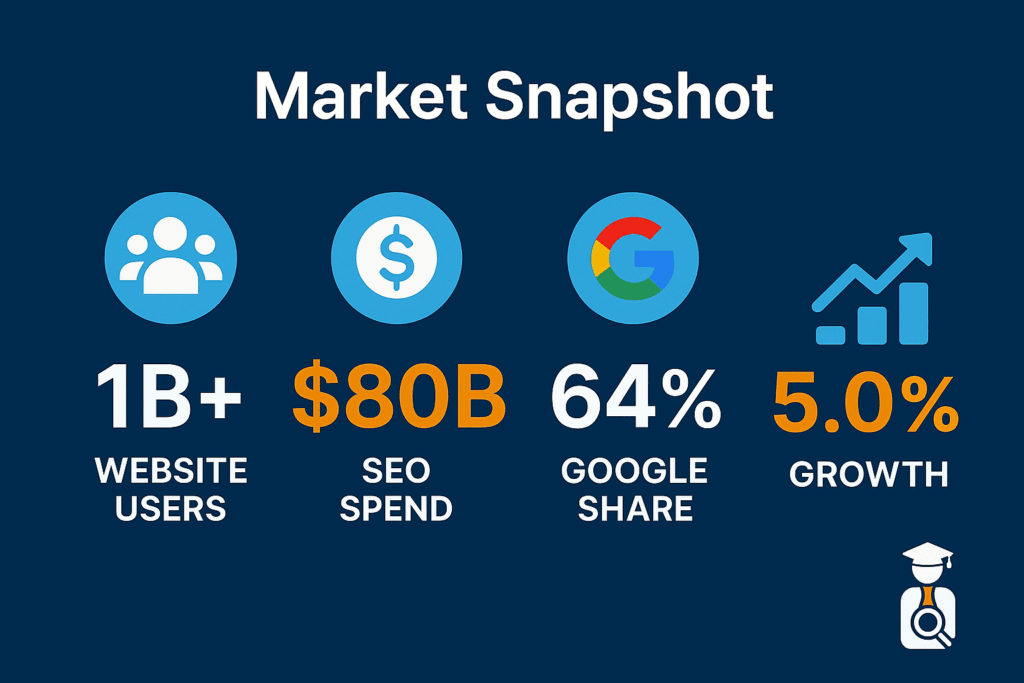

Search has fundamentally changed. With the rise of “zero-click” behavior, users increasingly get their answers directly within search results without ever visiting a website. SEO strategy for 2025 and beyond has to account for that shift.

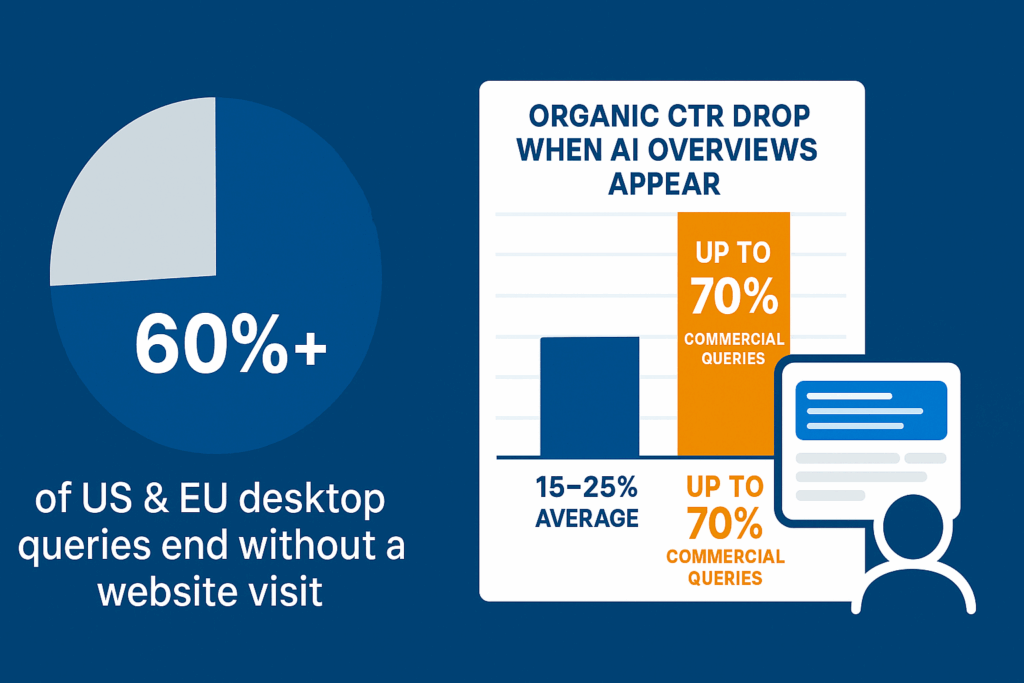

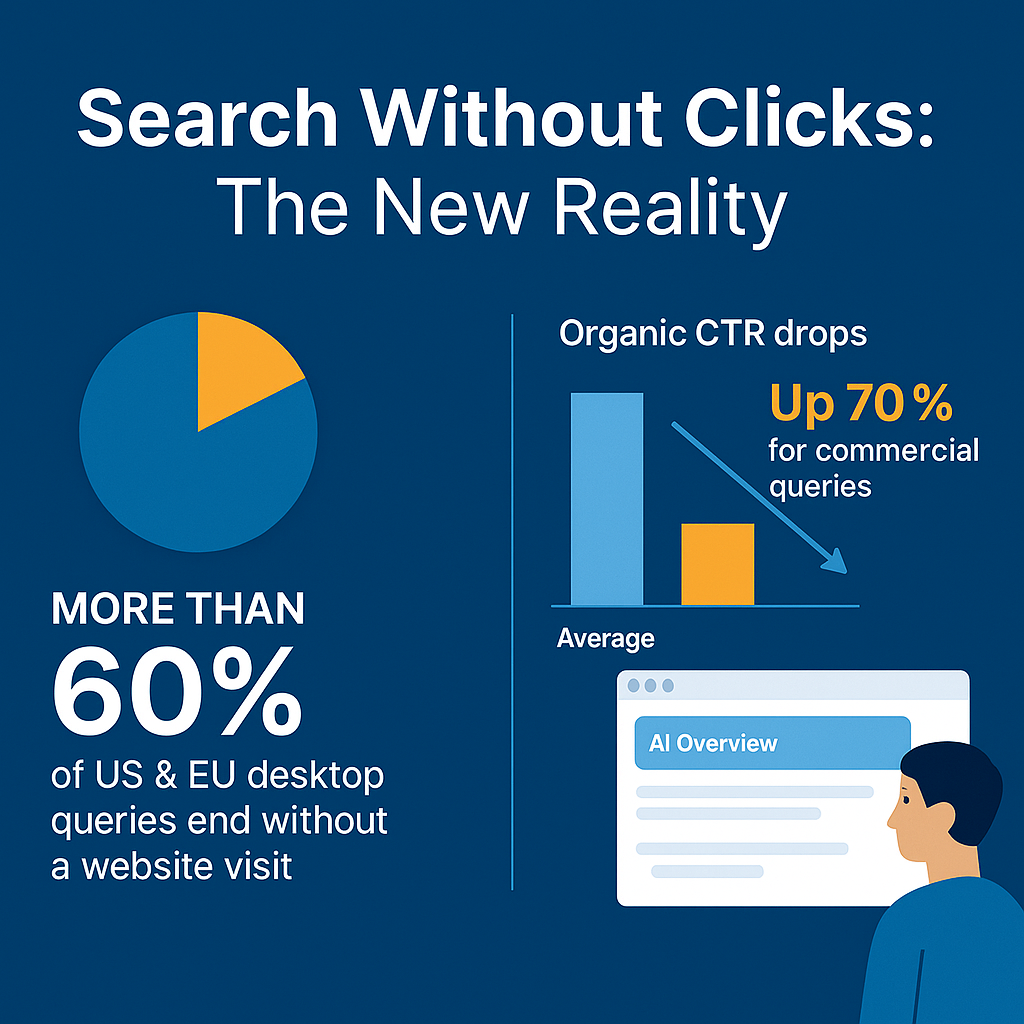

Recent data from SparkToro’s 2024 click-stream panel shows that only 374 of every 1,000 EU Google searches lead to an open-web click, and the US figure is similar at about 360 per 1,000. Seer Interactive has documented organic CTR drops of 15-25% when AI Overviews appear, with some commercial queries declining by as much as 70%.

This article explores how forward-thinking marketers are adapting through AI-Visibility (AIV) strategies and autonomous AI SEO agents. We’ll examine the architecture behind these systems, practical implementation steps, and real-world case studies showing measurable results. Whether you’re a small business owner or enterprise SEO director, you’ll discover actionable approaches to maintain visibility in an increasingly “answer-first, clicks later” search ecosystem.

Search Without Clicks: The New Reality

Zero-click search statistics

The data paints a clear picture: roughly 60% of US and EU Google searches now end without a website visit. This is not a minor trend but a fundamental shift in how people interact with search engines. AI-generated overviews and instant answers increasingly satisfy user queries right on the results page.

According to SparkToro’s latest research, the majority of searches now resolve without users clicking through to any website. This pattern is especially pronounced for informational queries and simple factual questions, but is increasingly affecting commercial searches as well.

For brands focusing on keyword research and optimization, this evolution requires a strategic pivot. While traditional traffic metrics remain important, new metrics like “answer-share” (how often your brand appears as the cited source in AI-generated answers) are becoming equally critical performance indicators.

Organic-CTR drop case studies

The impact of zero-click search on organic click-through rates is substantial and well-documented. Seer Interactive’s regression analysis shows that when Google displays AI Overviews, websites see average CTR declines of 15-25%, with some commercial queries dropping by as much as 70%.

These findings align with what many SEO professionals are reporting across industries. Product comparison queries, pricing questions, and simple how-to searches are particularly vulnerable, as AI-generated answers increasingly satisfy user intent directly in search results.

This shift means brands must rethink their approach to technical SEO and content strategy. Organizations that adapt quickly will find opportunities to be featured as authoritative sources within AI answers, maintaining brand visibility even when direct clicks decline.

Why brands must pivot now

The momentum behind this transformation is undeniable. Alphabet’s Q1-2025 earnings reveal that Google AI Overviews now reach approximately 1.5 billion monthly users worldwide. This massive adoption signals Google’s commitment to this format, making it clear that zero-click search is the new baseline, not a passing trend.

Furthermore, Google’s own data suggests that AI Overviews increase total search usage, which gives Google every reason to double down on the format. For brands, this creates an urgent imperative: develop strategies to maintain visibility within AI-generated answers or risk becoming increasingly invisible to potential customers.

The organizations thriving in this environment have already pivoted from traditional ranking-focused SEO to comprehensive AI-Visibility strategies. Those waiting for a return to the “old normal” of search are likely to find themselves at a significant competitive disadvantage as this transformation accelerates.

Why AI-Visibility (AIV) Beats Classic Rankings

SEO vs AEO vs AIV

The evolution from traditional SEO to AI-Visibility represents a fundamental shift in how brands approach search presence:

SEO (Search Engine Optimization) focuses on ranking web pages in search results to drive organic traffic. The primary goal is appearing at the top of SERPs for targeted keywords.

AEO (Answer Engine Optimization) emerged as featured snippets and voice search gained prominence. It prioritizes structuring content to be selected as direct answers to specific questions.

AIV (AI-Visibility) represents the newest paradigm, where success is measured by your brand’s presence within AI-generated responses across multiple platforms. Rather than just tracking rankings or traffic, AIV monitors how frequently your content gets cited, quoted, or referenced by AI systems.

This progression requires expanding your technical SEO foundation to include entity recognition, structured data implementation, and content that aligns with how AI systems interpret information.

Traffic-loss scenarios in Overviews & Perplexity

The impact of AI overviews on traffic varies by query type, but certain scenarios consistently result in significant traffic reduction:

- Product comparisons: When AI tools present comprehensive price and feature grids directly in search results, users often get the information they need without clicking through to a website.

- Simple informational queries: Questions with straightforward factual answers can be fully satisfied by AI overviews, eliminating the need to visit source websites.

- Local business information: When business hours, locations, and phone numbers appear directly in search results, users frequently get what they need without visiting the business website.

According to Seer Interactive’s research, these scenarios can result in CTR reductions of 15-70% compared to traditional search results, depending on the query type and industry. This underscores why relying solely on traffic as a success metric is increasingly problematic.

New KPIs to watch

As AI-generated answers become more prevalent, new key performance indicators (KPIs) are emerging to measure digital marketing effectiveness:

Answer-share: The percentage of relevant queries where your brand or content appears as a source in AI-generated answers. This can be tracked using tools like Profound’s “Conversation Explorer,” which helps brands understand how frequently they’re cited in AI responses.

Entity-coverage: How comprehensively AI systems represent your brand’s products, services, and key topics. This measures whether AI tools have accurate and complete information about your offerings.

Citation-rate: The frequency with which AI systems specifically name or attribute information to your brand when providing answers related to your industry or niche.

These metrics provide a more holistic view of brand visibility in an AI-first search landscape. While traditional traffic metrics remain important, these new KPIs help quantify your presence in zero-click environments where users get answers without visiting websites.
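To make the definitions above concrete, here is a minimal sketch of how answer-share and citation-rate could be computed from a sample of logged AI answers. The `AIAnswer` record shape, the function names, and the `acme.example` data are assumptions for illustration only; they do not reflect the export format of Conversation Explorer or any other tool.

```python
from dataclasses import dataclass, field


@dataclass
class AIAnswer:
    """One logged AI-generated answer for a tracked query (hypothetical shape)."""
    query: str
    text: str
    cited_domains: list[str] = field(default_factory=list)


def answer_share(answers: list[AIAnswer], domain: str) -> float:
    """Fraction of answers that list `domain` among their cited sources."""
    if not answers:
        return 0.0
    hits = sum(1 for a in answers if domain in a.cited_domains)
    return hits / len(answers)


def citation_rate(answers: list[AIAnswer], brand: str) -> float:
    """Fraction of answers whose text names the brand explicitly."""
    if not answers:
        return 0.0
    hits = sum(1 for a in answers if brand.lower() in a.text.lower())
    return hits / len(answers)


sample = [
    AIAnswer("best expense software", "Acme and others offer ...", ["acme.example"]),
    AIAnswer("expense policy template", "A policy should cover ...", ["other.example"]),
    AIAnswer("corporate card limits", "According to Acme, limits ...", []),
    AIAnswer("receipt scanning apps", "Several apps exist ...", ["acme.example"]),
]

print(answer_share(sample, "acme.example"))  # 0.5
print(citation_rate(sample, "Acme"))         # 0.5
```

Keeping the two functions separate reflects the distinction in the text: a brand can be linked as a source without being named, and named without being linked.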

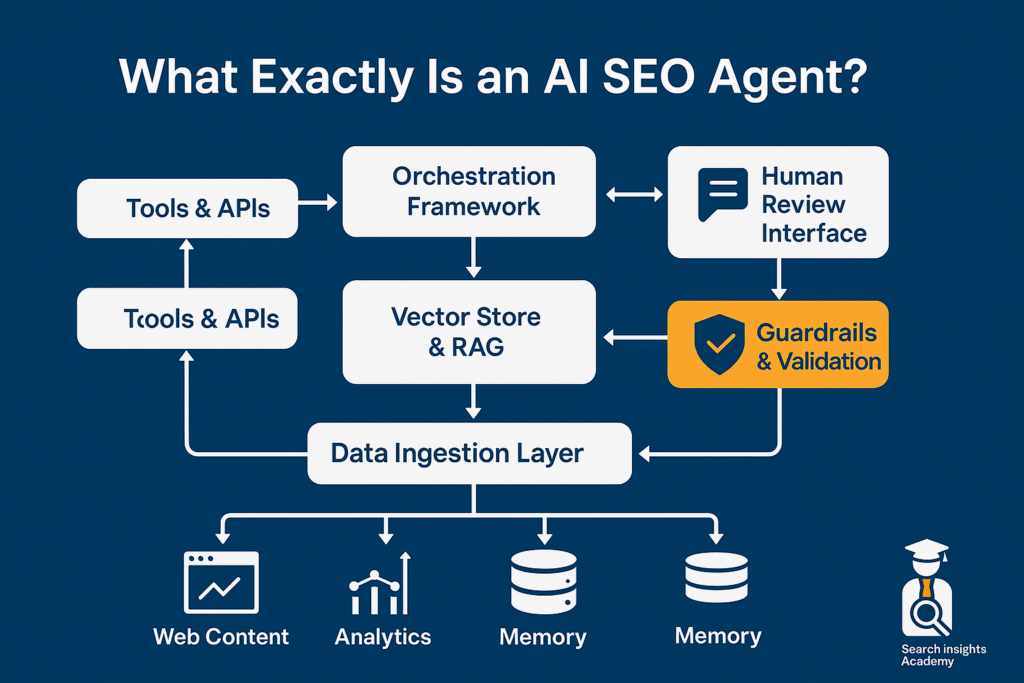

What Exactly Is an AI SEO Agent?

Conversational plug-ins vs autonomous task agents

There are two distinct approaches to integrating AI into SEO workflows:

Conversational plug-ins extend existing AI systems (like chatbots) to handle specific SEO-related queries or tasks. They’re reactive, responding to user requests rather than proactively identifying opportunities.

Autonomous task agents represent a more advanced approach. These systems can strategize, execute, and learn from SEO activities with minimal human intervention. They continuously monitor performance, identify optimization opportunities, and implement changes according to predetermined parameters.

The key difference is autonomy—while plug-ins require human direction for each task, autonomous agents can identify issues, propose solutions, and (when properly configured) implement changes independently. This capability is crucial for keeping pace with the rapidly evolving AI search landscape.

Core components – memory, tools, guardrails

A fully functional AI SEO agent integrates three essential components:

Memory systems store and retrieve relevant information that informs the agent’s decisions. Options include:

- FAISS (Facebook AI Similarity Search): An open-source library for efficient similarity search

- pgvector: A PostgreSQL extension that enables vector similarity queries

- Supabase Vector: A cloud solution that provides automatic embeddings and simplified vector storage

Tools and APIs connect the agent to data sources and execution platforms, such as:

- Analytics tools (GA4, Search Console)

- Rank tracking platforms

- Content management systems

- Structured data validators

- Performance monitoring tools

Guardrails prevent unwanted outcomes by establishing boundaries and validation processes:

- JSON schema validation via tools like Guardrails AI

- Brand voice and style verification

- Fact-checking mechanisms

- Approval workflows for significant changes

Together, these components create a system capable of understanding your content, identifying optimization opportunities, and implementing improvements while maintaining brand standards and factual accuracy.

Agent lifecycle & feedback loop

An AI SEO agent operates in a continuous improvement cycle:

- Ingest: Collect data from your website, analytics platforms, and external sources

- Vectorize: Transform content into numerical representations for efficient retrieval

- Propose: Generate optimization recommendations based on current data and goals

- Human review: Validate and refine agent suggestions

- Publish: Implement approved changes

- Measure: Track performance using both traditional metrics and AI-specific KPIs

- Iterate: Feed results back into the system to improve future recommendations

This feedback loop enables the agent to continuously refine its approach based on real-world results. As patterns emerge in what works and what doesn’t, the agent becomes increasingly effective at identifying high-impact optimization opportunities.

Memory options

The memory system is crucial for an AI SEO agent’s performance. Several options have emerged as leaders in this space:

FAISS (Facebook AI Similarity Search) offers high-performance similarity search for dense vectors. It’s ideal for large-scale applications but requires more technical expertise to implement.

pgvector extends PostgreSQL databases with vector similarity capabilities, making it accessible for organizations already using PostgreSQL infrastructure.

Supabase Vector provides automatic embeddings and simplified integration, reducing implementation complexity. Their newest feature automatically creates and manages embeddings, significantly reducing the technical barrier to entry.

For optimal retrieval performance, research suggests using dynamic chunk sizes of 300 to 500 tokens rather than fixed-size chunks. Chunks in this range carry enough context to be meaningful while staying small enough for precise retrieval.
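One way to read “dynamic” chunking is to let chunk boundaries fall on sentence breaks instead of cutting at a fixed offset. The sketch below takes that approach. It approximates tokens with whitespace-separated words, which is an assumption; a production pipeline would count tokens with the embedding model’s own tokenizer. The function name `chunk_text` is likewise illustrative.

```python
import re


def chunk_text(text: str, min_tokens: int = 300, max_tokens: int = 500) -> list[str]:
    """Group whole sentences into chunks of roughly min_tokens..max_tokens.

    A chunk closes at the first sentence boundary at or past min_tokens.
    A single sentence longer than max_tokens still becomes its own chunk.
    """
    if not text.strip():
        return []
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for sentence in sentences:
        n = len(sentence.split())  # word count as a stand-in for tokens
        # Start a new chunk rather than overshoot the ceiling.
        if current and count + n > max_tokens:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
        # Close the chunk once it reaches the floor.
        if count >= min_tokens:
            chunks.append(" ".join(current))
            current, count = [], 0
    if current:
        chunks.append(" ".join(current))
    return chunks


doc = " ".join(["One two three four five."] * 200)  # 1,000 words
print([len(c.split()) for c in chunk_text(doc)])  # [300, 300, 300, 100]
```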

CrewAI’s new external-memory module offers a streamlined way to integrate pgvector with its agents. CrewAI is a Python framework, so this route is most accessible for development teams already familiar with Python.

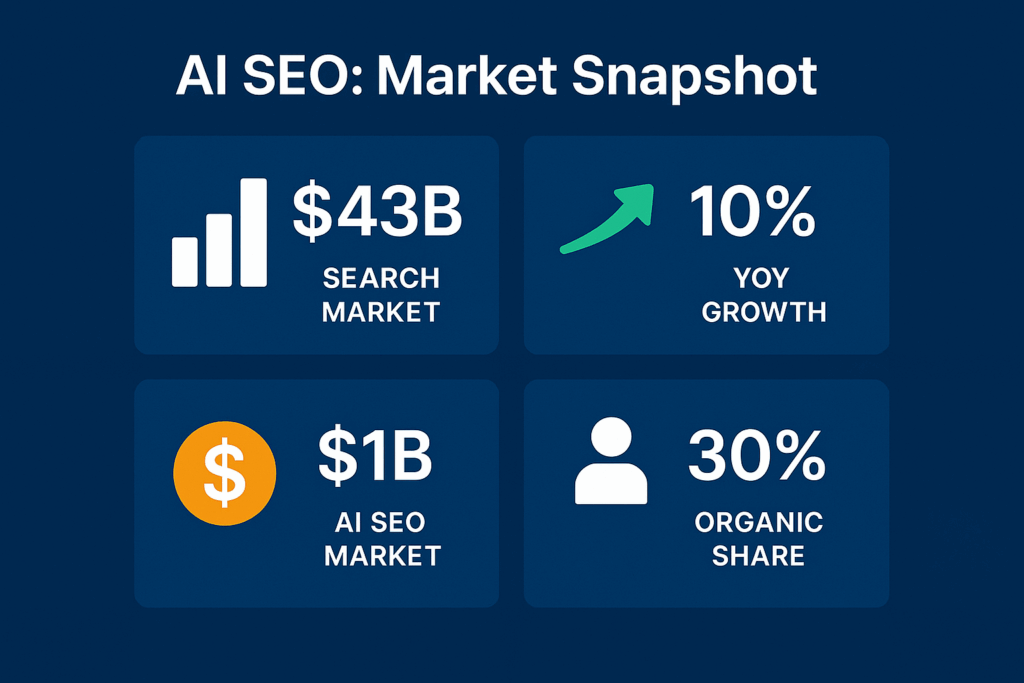

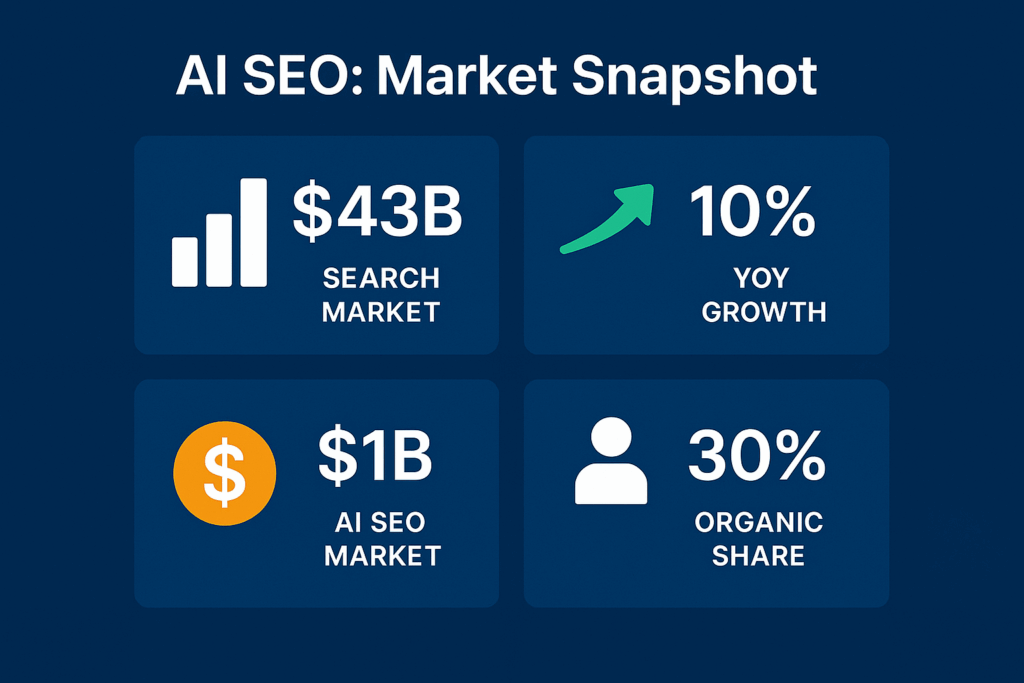

April 2025 Market Snapshot

Google AI Overviews global reach

Google’s AI Overviews have expanded dramatically, now reaching approximately 1.5 billion monthly users worldwide according to Alphabet’s latest quarterly earnings. This significant scale underscores Google’s commitment to prioritizing direct answers over traditional web results.

The format continues to evolve, with recent updates focusing on sourcing information from a more diverse range of websites while still prioritizing authoritative content. These overviews now appear for approximately 40% of all search queries, with particularly high coverage for informational and commercial research queries.

For SEO professionals, this widespread adoption reinforces the need to optimize content for inclusion in these AI-generated summaries. Visibility within Overviews is increasingly becoming as important as traditional ranking positions.

Perplexity “Sponsored Question” ads

Perplexity has launched “Sponsored Question” ads, creating a new monetization channel for their AI search experience. This format allows brands to sponsor specific questions relevant to their products or services, ensuring their information appears prominently in responses.

According to TechCrunch reporting, this represents Perplexity’s first major advertising product. The company has positioned these sponsored placements as a way to provide value to users while creating revenue opportunities for the platform.

Perplexity’s own blog explains that they’re carefully testing this format to balance user experience with monetization needs. Early results suggest users find the sponsored content valuable when it’s relevant and transparently labeled.

Brandtech / Profound “Conversation Explorer”

The Financial Times recently reported on Brandtech and Profound’s “Conversation Explorer” tool, which helps brands track their presence in AI conversations. This analytics platform monitors how frequently brands appear in AI-generated answers across multiple platforms.

Early adopters like Ramp and Indeed have leveraged these insights to optimize their content for AI visibility. The tool provides granular metrics on answer-share, entity coverage, and citation patterns, enabling data-driven optimization strategies.

For marketers, tools like Conversation Explorer represent an emerging category of analytics focused specifically on measuring AI visibility rather than traditional web traffic or rankings.

Writesonic SEO Agent beta

Writesonic has released a beta version of their SEO Agent, providing marketers with an accessible entry point to AI-powered optimization. This tool automates common SEO tasks like meta tag generation, content recommendations, and keyword opportunity identification.

Unlike fully autonomous agents, Writesonic’s solution focuses on augmenting human SEO work rather than replacing it. The system provides recommendations that marketers can review and implement, streamlining workflows without removing human oversight.

This approach represents a middle ground between completely manual SEO and fully autonomous agents, making advanced AI capabilities accessible to smaller marketing teams without specialized AI expertise.

Google’s DMA “blue-links only” experiment

In response to the Digital Markets Act (DMA) in Europe, Google has been testing a “blue-links only” search experience that removes many rich results and AI-generated content. According to Search Engine Land, this experiment demonstrates how regulatory requirements might reshape search results.

This development highlights the potential for regional variations in search experiences based on different regulatory frameworks. For global brands, this means potentially maintaining different optimization strategies for different markets based on how AI content is regulated and displayed.

The experiment also provides a rare glimpse of what search might look like without AI enhancements—reinforcing just how significantly these features have changed user expectations around search results.

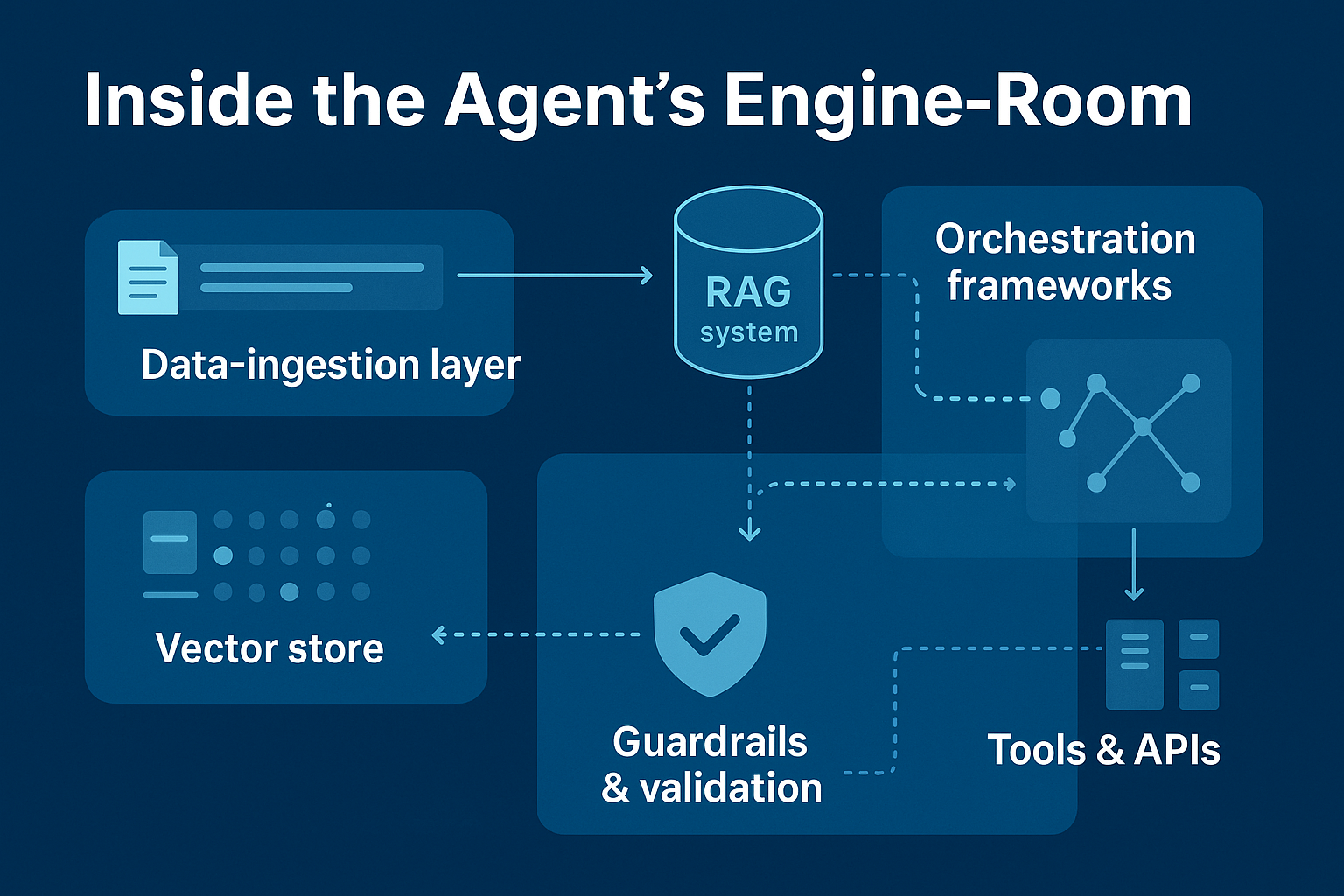

Inside the Agent’s Engine-Room

Data-ingestion layer

The foundation of any effective AI SEO agent is its data ingestion system. This component collects, processes, and organizes information from multiple sources to fuel the agent’s decision-making.

Modern ingestion systems often leverage workflow automation tools like n8n, which recently added a dedicated AI-Agent node in version 1.89. This addition simplifies building low-code pipelines that connect data sources to AI processing components.

Effective data ingestion requires addressing several key challenges:

- Data freshness: Ensuring the agent works with current information

- Format standardization: Converting diverse content types into consistent formats

- Quality filtering: Removing low-quality or irrelevant information

- Metadata enrichment: Adding context that helps the agent understand content relevance

By solving these challenges, the data ingestion layer provides the raw material necessary for effective SEO optimization in an AI-first environment.

Vector store & Retrieval-Augmented Generation

Once data is ingested, it’s transformed into vector representations and stored in specialized databases optimized for similarity search. This enables Retrieval-Augmented Generation (RAG), where the agent can quickly identify and access relevant information when making decisions.

Supabase’s automatic embeddings feature has significantly reduced implementation complexity by handling vector creation and management behind the scenes. This makes RAG technology more accessible to teams without specialized machine learning expertise.

For optimal retrieval performance, testing indicates that dynamic chunk sizes of 300 to 500 tokens generally yield the best accuracy. This range provides sufficient context for the AI to understand content meaning while keeping retrieval precise.

The vector store essentially functions as the agent’s long-term memory, allowing it to draw connections between related content and identify patterns that inform optimization strategies.
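The retrieval step at the heart of RAG can be illustrated without any database at all. The following sketch ranks stored vectors by cosine similarity to a query vector. The three-dimensional vectors and page names are toy assumptions; real embeddings have hundreds or thousands of dimensions, and a store such as FAISS or pgvector would handle the search at scale.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], store: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k most similar document IDs with their scores."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in store.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy "embeddings" keyed by page; values are made up for illustration.
store = {
    "pricing-page": [0.9, 0.1, 0.0],
    "blog-schema-guide": [0.1, 0.9, 0.2],
    "careers": [0.0, 0.1, 0.9],
}
query = [0.2, 0.8, 0.1]

results = top_k(query, store)
print([doc_id for doc_id, _ in results])  # ['blog-schema-guide', 'pricing-page']
```

The retrieved chunks would then be passed to the language model as context, which is what “retrieval-augmented” refers to.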

Orchestration frameworks

Orchestration frameworks coordinate the various components of an AI SEO agent, ensuring they work together effectively. Two notable options have emerged as leaders in this space:

CrewAI (Python) recently added a memory module that integrates with pgvector, making it easier to build agents with persistent knowledge. This framework excels at creating agents that can maintain context across multiple tasks and sessions.

AutoGen 0.4 has introduced multi-agent benchmarking and a graphical user interface, simplifying agent development and performance evaluation. These additions make it easier to test different agent configurations and identify the most effective approaches.

These frameworks handle critical orchestration tasks like:

- Sequencing operations in logical order

- Managing state across multiple processes

- Handling failures and retries

- Scaling resources based on workload

- Logging activities for analysis and compliance

By abstracting these complex coordination tasks, orchestration frameworks allow developers to focus on agent logic rather than infrastructure management.

Guardrails & validation

Guardrails ensure AI SEO agents operate within acceptable parameters, preventing potentially harmful actions or content. These safeguards are essential for maintaining brand integrity and ensuring regulatory compliance.

Guardrails AI provides JSON schema validation that can catch malformed or off-spec output before content is published. This approach defines explicit structures for agent outputs and flags anything that doesn’t conform to expected patterns. Because schema checks verify structure rather than facts, they complement the fact-checking steps below rather than replace them.

Effective guardrails typically include:

- Factual accuracy verification

- Brand voice and style checks

- Compliance with regulatory requirements

- Performance impact predictions

- Approval workflows for significant changes

These mechanisms ensure that automated optimizations enhance rather than damage your digital presence, providing confidence that autonomous agents won’t make catastrophic mistakes.
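As a rough illustration of the structural side of guardrails, the sketch below validates an agent’s JSON output against a small contract using only the Python standard library. It does not use the Guardrails AI API. The field names and the 60 and 160 character limits are assumptions chosen for the example, not fixed rules.

```python
import json

# Hypothetical contract for a title/meta recommendation produced by the agent.
REQUIRED_FIELDS = {"url": str, "title": str, "meta_description": str}
MAX_LENGTHS = {"title": 60, "meta_description": 160}  # illustrative limits


def validate_output(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the output passes."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]
    problems = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in data:
            problems.append(f"missing field: {name}")
        elif not isinstance(data[name], expected):
            problems.append(f"wrong type for {name}")
        elif name in MAX_LENGTHS and len(data[name]) > MAX_LENGTHS[name]:
            problems.append(f"{name} exceeds {MAX_LENGTHS[name]} characters")
    return problems


good = '{"url": "/pricing", "title": "Pricing", "meta_description": "Plans and costs."}'
bad = '{"url": "/pricing", "title": 42}'

print(validate_output(good))  # []
print(validate_output(bad))   # ['wrong type for title', 'missing field: meta_description']
```

Anything that returns a non-empty problem list would be held back from publishing and routed to the approval workflow.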

Deploy, test, iterate cycles

AI SEO agents operate in a continuous improvement loop of deployment, testing, and iteration. This cyclical process allows agents to learn from real-world results and progressively improve their effectiveness.

A typical cycle includes:

- Stage changes in a test environment before applying them to production

- Monitor performance using both traditional metrics and AI-specific KPIs

- Auto-revert changes that cause performance declines

- Document successes to inform future optimization strategies

- Refine agent parameters based on observed outcomes

This methodology applies scientific testing principles to SEO, creating a data-driven approach to optimization that continuously improves based on real-world results.
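The auto-revert step in the cycle above can be reduced to a simple comparison against a baseline. This is a minimal sketch under stated assumptions: the `Change` record, the 10% relative tolerance, and the use of CTR as the only signal are all illustrative choices, and a real system would also wait for enough data before deciding.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """A published change with enough state to undo it (hypothetical shape)."""
    url: str
    before: str
    after: str
    baseline_ctr: float


def should_revert(baseline_ctr: float, current_ctr: float, tolerance: float = 0.10) -> bool:
    """True when CTR has fallen more than `tolerance` (relative) below baseline."""
    if baseline_ctr <= 0:
        return False  # nothing meaningful to compare against
    drop = (baseline_ctr - current_ctr) / baseline_ctr
    return drop > tolerance


def review(change: Change, current_ctr: float, tolerance: float = 0.10) -> str:
    """Return the value that should be live after checking performance."""
    if should_revert(change.baseline_ctr, current_ctr, tolerance):
        return change.before
    return change.after


change = Change("/pricing", "Pricing | Example Co", "Plans from $49 | Example Co", 0.040)
print(review(change, current_ctr=0.035))  # Pricing | Example Co  (12.5% drop, reverted)
print(review(change, current_ctr=0.038))  # Plans from $49 | Example Co  (5% drop, kept)
```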

Retrieval optimisation

Effective retrieval is critical for an AI SEO agent’s performance. Internal testing suggests that dynamic chunk sizing of 300 to 500 tokens typically yields the best RAG accuracy, though optimal settings vary with content type and application.

Beyond chunk sizing, several other retrieval optimization techniques can improve agent performance:

- Hybrid retrieval combining keyword and semantic search

- Re-ranking retrieved chunks based on relevance

- Query expansion to capture related concepts

- Contextual weighting to emphasize recent or important content

These techniques ensure the agent identifies the most relevant information when making decisions, improving the quality of its recommendations and actions.
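Hybrid retrieval needs some way to merge a keyword ranking with a semantic ranking. One widely used option is reciprocal rank fusion (RRF), sketched below. RRF is named here as one possible technique, not as the method any specific product uses, and the document IDs and the constant `k = 60` are illustrative assumptions.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one fused ranking.

    Each document scores 1 / (k + rank) per list it appears in, so items
    ranked well by several retrievers rise to the top.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


keyword_hits = ["faq", "pricing", "blog"]     # e.g. from a keyword index
semantic_hits = ["pricing", "guide", "faq"]   # e.g. from a vector store

print(reciprocal_rank_fusion([keyword_hits, semantic_hits]))
# ['pricing', 'faq', 'guide', 'blog']
```

Because RRF works on ranks rather than raw scores, it avoids having to normalize keyword scores and cosine similarities onto the same scale.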

Automation Toolkit Choices

Low-/no-code: n8n, Zapier Agents, Make

For organizations with limited development resources, low-code and no-code tools offer accessible entry points to AI SEO automation:

n8n recently added an AI-Agent node in version 1.89, enabling low-code pipelines that connect data sources to AI processing components. This visual workflow builder makes it relatively straightforward to create automated processes without extensive coding.

Zapier Agents (currently in beta) extends Zapier’s automation platform with AI capabilities. This allows marketers to create intelligent workflows that respond to changing conditions and make data-driven decisions.

Make (formerly Integromat) provides another visual automation platform with extensive integration options. Its flexible approach to workflow design makes it suitable for creating complex automation sequences.

These platforms are ideal for quick proof-of-concept implementations and scenarios where marketing teams need to operate with minimal developer involvement. They provide a practical starting point for organizations looking to test AI SEO automation before investing in more sophisticated solutions.

Code-first: CrewAI, Prefect 3 ControlFlow, Airflow, Dagster

For more advanced implementations requiring greater flexibility and control, several code-first frameworks stand out:

CrewAI provides a Python framework specifically designed for creating AI agents. Its recent memory module addition simplifies creating agents with persistent knowledge, making it well-suited for SEO applications.

Prefect 3 ControlFlow offers Python-based workflow orchestration with comprehensive monitoring and failure handling. Its focus on reliability makes it appropriate for mission-critical SEO automation.

Airflow remains a popular choice for data pipeline orchestration, with extensive scheduling capabilities and a large ecosystem of integrations. Its maturity makes it a safe choice for enterprise deployments.

Dagster emphasizes data-aware orchestration, making it well-suited for SEO applications that involve complex data transformations and dependencies.

These frameworks offer greater customization and scaling capabilities compared to no-code solutions, making them appropriate for enterprise-scale implementations and scenarios requiring advanced functionality.

Selection criteria (scale, budget, skill set)

Choosing the right automation toolkit depends on several key factors:

Scale: Larger organizations with extensive content and complex SEO needs typically benefit from code-first solutions that offer greater customization and performance at scale. Smaller organizations may find no-code tools sufficient for their needs.

Budget: No-code platforms generally involve subscription costs but lower implementation expenses. Code-first solutions often have lower licensing costs but require more significant development investment.

Skill set: Organizations with strong development capabilities can leverage code-first solutions effectively. Teams with limited technical resources may achieve better results with no-code platforms despite their limitations.

Timeline: No-code tools enable faster initial implementation, while code-first solutions typically require more upfront development time but offer greater long-term flexibility.

By carefully evaluating these factors, organizations can select the approach that best aligns with their resources and objectives.

Five Deploy-Today Automations

Entity-markup injector (schema.org)

This automation identifies pages missing structured data markup and automatically adds appropriate schema.org annotations. By improving entity recognition, you boost your chances of appearing in AI-generated results.

The process typically includes:

- Scanning pages for existing structured data

- Identifying missing entity types based on content analysis

- Generating appropriate schema.org markup

- Injecting the markup into page templates

- Validating the implementation using Google’s Rich Results Test

This automation directly supports structured data implementation best practices, improving how search engines and AI systems understand your content.
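The scan, generate, and inject steps above can be sketched in a few lines. This example builds a schema.org `Organization` entity as JSON-LD and adds it to a page that has none. The helper names, the string-based detection, and the example company are assumptions; a real injector would parse the HTML properly and work at the template level.

```python
import json


def build_organization_jsonld(name: str, url: str, same_as: list[str]) -> str:
    """Render a schema.org Organization entity as a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,
    }
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"


def has_jsonld(html: str) -> bool:
    """Cheap check for existing JSON-LD; a real scanner would parse the HTML."""
    return "application/ld+json" in html


page = "<html><head><title>Example Co</title></head><body></body></html>"
if not has_jsonld(page):
    tag = build_organization_jsonld(
        "Example Co",
        "https://example.com",
        ["https://www.linkedin.com/company/example"],
    )
    page = page.replace("</head>", tag + "</head>")

print(has_jsonld(page))  # True
```

The generated markup should still go through the Rich Results Test step listed above before it reaches production.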

Title/meta re-writer (A/B tests on CTR drops)

This automation continuously tests alternative title and meta description variations to identify those that generate the highest click-through rates. When AI Overviews appear and impact CTR, this system can quickly identify and implement more effective metadata.

The workflow typically includes:

- Monitoring CTR for key pages

- Generating alternative title and description variants

- Implementing A/B tests to measure performance

- Automatically selecting the highest-performing variants

- Monitoring for performance declines and reverting if necessary

By responding quickly to CTR changes caused by AI Overviews, this automation helps maintain traffic levels even as search results evolve.
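Selecting the “highest-performing” variant is safer with a significance check than with a raw CTR comparison. The sketch below uses a two-proportion z-test, assuming clicks and impressions for each variant come from comparable periods or page groups and that both impression counts are positive. The 1.96 threshold (about 95% confidence) and the function names are illustrative choices.

```python
import math


def z_score(clicks_a: int, views_a: int, clicks_b: int, views_b: int) -> float:
    """Two-proportion z-score for the CTR difference (B minus A)."""
    p_a, p_b = clicks_a / views_a, clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    return (p_b - p_a) / se if se else 0.0


def pick_winner(clicks_a: int, views_a: int, clicks_b: int, views_b: int,
                threshold: float = 1.96) -> str:
    """Return 'A', 'B', or 'no clear winner' based on the z-score."""
    z = z_score(clicks_a, views_a, clicks_b, views_b)
    if z > threshold:
        return "B"
    if z < -threshold:
        return "A"
    return "no clear winner"


print(pick_winner(300, 10_000, 360, 10_000))  # B
print(pick_winner(300, 10_000, 310, 10_000))  # no clear winner
```

Returning “no clear winner” keeps the automation from flipping titles on noise, which matters when AI Overviews are already making CTR volatile.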

Answer-snippet grader against AI-Overview copies

This automation evaluates how your content appears in AI-generated snippets and identifies opportunities for improvement. It compares the AI-generated content with your original material to ensure accuracy and optimal presentation.

The process typically includes:

- Monitoring AI Overviews that reference your content

- Comparing generated snippets with your source material

- Scoring snippets based on accuracy, completeness, and alignment with your messaging

- Identifying opportunities to improve content to better inform AI systems

- Tracking changes in how your content is represented over time

This automation helps ensure your content is accurately and effectively represented in AI-generated results, maintaining brand integrity in zero-click environments.
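The scoring step can start very simply. The sketch below grades a snippet on two axes: how much of its wording is supported by your source text, and how many of your key facts it carries. Token overlap is a crude proxy for accuracy, and the function names, the sample copy, and the `key_facts` list are assumptions for illustration.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grade_snippet(snippet: str, source: str, key_facts: list[str]) -> dict[str, float]:
    """Score a snippet for support by the source and coverage of key facts."""
    snippet_tokens, source_tokens = tokens(snippet), tokens(source)
    supported = len(snippet_tokens & source_tokens) / len(snippet_tokens) if snippet_tokens else 0.0
    covered = sum(1 for fact in key_facts if fact.lower() in snippet.lower())
    completeness = covered / len(key_facts) if key_facts else 0.0
    return {"supported": round(supported, 2), "completeness": round(completeness, 2)}


source = "The Pro plan costs $49 per month and includes 10 seats."
snippet = "The Pro plan costs $49 per month."

print(grade_snippet(snippet, source, ["$49", "10 seats"]))
# {'supported': 1.0, 'completeness': 0.5}
```

A low `supported` score hints that the AI answer says things your page does not, while a low `completeness` score suggests the key facts are not prominent enough in the source content.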

Local-listing freshness bot (weekly GBP sync)

For businesses with physical locations, this automation ensures Google Business Profile and other local listings remain current and comprehensive. It synchronizes information across platforms and identifies opportunities to enhance local visibility.

The workflow typically includes:

- Weekly audits of local listing information

- Updating hours, special events, and seasonal information

- Synchronizing data across multiple listing platforms

- Monitoring review activity and flagging items requiring response

- Tracking local search visibility and identifying optimization opportunities

This automation directly supports local SEO best practices, helping businesses maintain visibility in location-based searches.
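At the core of the weekly audit is a comparison between your source-of-truth record and what each listing currently shows. The sketch below covers only that diff; fetching and updating listings through a platform API is deliberately left out. The field names and sample values are hypothetical.

```python
def diff_listing(truth: dict[str, str], listing: dict[str, str]) -> dict[str, tuple]:
    """Map each out-of-date field to (current listing value, expected value)."""
    stale = {}
    for field_name, expected in truth.items():
        current = listing.get(field_name)
        # Compare case-insensitively and ignore stray whitespace.
        if current is None or current.strip().lower() != expected.strip().lower():
            stale[field_name] = (current, expected)
    return stale


truth = {
    "phone": "+61 2 5550 0100",
    "hours_mon": "09:00-17:00",
    "address": "1 Example St, Sydney",
}
gbp = {"phone": "+61 2 5550 0100", "hours_mon": "09:00-18:00"}

print(diff_listing(truth, gbp))
# {'hours_mon': ('09:00-18:00', '09:00-17:00'), 'address': (None, '1 Example St, Sydney')}
```

Running the same diff against each listing platform gives the synchronization step a concrete list of fields to push.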

Continuous AIV dashboard (feeds Profound Explorer)

This automation creates a real-time dashboard tracking AI Visibility metrics across multiple platforms. By centralizing this data, it enables strategic decision-making based on comprehensive visibility insights.

The dashboard typically includes:

- Answer-share metrics across major AI platforms

- Entity coverage analysis showing how completely your brand is represented

- Citation rate tracking showing how often your brand is specifically mentioned

- Comparative analysis against competitors

- Trend analysis showing changes over time

By monitoring these metrics continuously, organizations can quickly identify opportunities to improve AI visibility and respond to competitive challenges.

Mini Case Studies

Ramp grew answer-share from 2%→7% in 8 weeks via Profound Explorer

Financial technology company Ramp successfully increased their presence in AI-generated answers by implementing a comprehensive AI visibility strategy. Using Profound’s Conversation Explorer to track performance, they identified specific questions where they had opportunities to improve their answer-share.

According to the Financial Times, Ramp’s approach focused on enhancing content specifically designed to address common financial queries. By optimizing content structure and implementing comprehensive schema markup, they increased their answer-share from 2% to 7% within eight weeks.

Key to their success was focusing on specific high-value queries rather than attempting to optimize for all possible questions. This targeted approach delivered substantial improvements in a relatively short timeframe.

Indeed lifted snippet CTR on “jobs in Sydney” by 18%

Employment platform Indeed faced declining click-through rates when AI Overviews began appearing for job-related queries. Rather than accepting this traffic loss, they implemented an AI SEO agent focused on optimizing snippet performance.

By analyzing how job listings appeared in AI-generated results, Indeed identified opportunities to make their content more compelling even when condensed into snippets. Their optimization efforts focused on:

- More engaging job title formats

- Clearer salary and benefit information

- Distinctive company value propositions

- Location-specific advantages

These changes led to an 18% increase in CTR for “jobs in Sydney” queries, demonstrating that well-optimized content can drive clicks even when competing with AI-generated overviews.

Shopify merchants trialling Perplexity ads saw 12% lead-gen lift

According to TechCrunch reporting, Shopify merchants participating in Perplexity’s “Sponsored Question” ad beta program experienced significant improvements in lead generation. By positioning their solutions as direct answers to relevant questions, these merchants created a new acquisition channel complementing their existing marketing efforts.

The sponsored content appeared within Perplexity’s conversational search results, providing value to users while simultaneously showcasing merchant offerings. This contextual placement resulted in a 12% increase in qualified leads compared to traditional advertising channels.

This case study demonstrates that embracing new AI-focused advertising formats can deliver meaningful business results, particularly when the sponsored content provides genuine value to users seeking specific information.

Build-Your-Own Agent in 11 Steps

1. Ingest → 2. Vectorise

The first phase involves collecting and preparing your content for AI processing:

Step 1: Ingest data

- Gather website content, including pages, blog posts, and product information

- Collect analytics data showing current performance

- Import competitor information for comparative analysis

- Assemble relevant industry research and trend data

Step 2: Vectorise content

- Transform text into numerical vector representations

- Store vectors in your chosen database (FAISS, pgvector, or Supabase Vector)

- Create metadata indexes to facilitate efficient retrieval

- Implement dynamic chunk sizing (300-500 tokens) for optimal retrieval performance

These initial steps create the foundation for your AI SEO agent by establishing its knowledge base and enabling efficient information retrieval.

3. Define roles → 4. Prompt schema

Next, establish how your agent will operate and interact:

Step 3: Define roles

- Specify the agent’s responsibilities (e.g., content optimization, structured data implementation)

- Establish decision-making authority (which changes the agent can make autonomously)

- Define escalation paths for situations requiring human intervention

- Create role hierarchies for multi-agent systems

Step 4: Craft prompt schema

- Develop structured prompts that guide the agent’s responses

- Implement JSON schema validation to ensure output consistency

- Create templates for different types of optimization tasks

- Define guardrails that prevent unwanted content or actions

These steps establish the agent’s operating parameters, ensuring it functions as expected while maintaining appropriate safety mechanisms.
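To make Steps 3 and 4 more concrete, here is a minimal sketch of a role table, a prompt template, and an output check. The role names, the four output keys, and the allowed change types are all hypothetical, and the hand-written validator is only a stand-in for a proper JSON Schema validator or Guardrails AI.

```python
import json

# Hypothetical roles: which change types each role may publish without review.
ROLES = {
    "content_optimizer": {"may_publish_alone": {"meta_description"}},
    "schema_specialist": {"may_publish_alone": set()},
}

# Hypothetical output contract: required key -> expected Python type.
TASK_SCHEMA = {
    "page_url": str,
    "change_type": str,
    "proposed_text": str,
    "needs_human_review": bool,
}
ALLOWED_CHANGE_TYPES = {"title", "meta_description", "faq", "structured_data"}

PROMPT_TEMPLATE = (
    "You are an SEO agent acting as: {role}.\n"
    "Propose one change for the page {page_url}.\n"
    "Reply with JSON only, using exactly these keys: {keys}."
)


def build_prompt(role, page_url):
    """Fill the template so every task asks for the same output shape."""
    return PROMPT_TEMPLATE.format(
        role=role, page_url=page_url, keys=", ".join(TASK_SCHEMA))


def requires_human_review(role, change_type):
    """Escalate anything the role is not cleared to publish on its own."""
    return change_type not in ROLES[role]["may_publish_alone"]


def validate_output(raw):
    """Return (data, errors); data is None whenever any check fails."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["top-level value must be an object"]
    errors = []
    for key, expected in TASK_SCHEMA.items():
        if key not in data:
            errors.append(f"missing key: {key}")
        elif not isinstance(data[key], expected):
            errors.append(f"{key} must be {expected.__name__}")
    extra = sorted(set(data) - set(TASK_SCHEMA))
    if extra:
        errors.append(f"unexpected keys: {extra}")
    change_type = data.get("change_type")
    if isinstance(change_type, str) and change_type not in ALLOWED_CHANGE_TYPES:
        errors.append(f"change_type not allowed: {change_type}")
    return (data if not errors else None), errors
```

Rejecting the whole output on any error, rather than repairing it, keeps the guardrail simple: the agent is asked again, and nothing malformed reaches the review queue.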

5. Run loop → 6. Human review → 7. Publish

With infrastructure in place, the agent can begin active optimization:

Step 5: Run first loop

- Initiate the agent with specific optimization objectives

- Monitor processing speed and resource utilization

- Capture detailed logs for later analysis

- Identify any initial issues requiring adjustment

Step 6: Human-in-the-loop review

- Review agent recommendations before implementation

- Provide feedback to improve future suggestions

- Approve, modify, or reject proposed changes

- Document decision rationale for training purposes

Step 7: Publish changes

- Implement approved optimizations

- Record before/after state for comparison

- Tag changes for performance tracking

- Ensure publishing systems maintain version history

This operational phase puts your agent to work while maintaining appropriate human oversight and accountability.
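One way to picture Steps 6 and 7 is a review function that applies a human decision and records the before/after state. The sketch below is an assumption-laden simplification: `PageStore` is an in-memory stand-in for a CMS with version history, and the class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"
    MODIFY = "modify"
    REJECT = "reject"


@dataclass
class PageStore:
    """In-memory stand-in for a CMS that keeps every published version."""
    history: dict = field(default_factory=dict)  # url -> versions, oldest first

    def current(self, url) -> Optional[str]:
        versions = self.history.get(url, [])
        return versions[-1] if versions else None

    def publish(self, url, text) -> None:
        self.history.setdefault(url, []).append(text)


@dataclass
class ReviewRecord:
    page_url: str
    decision: Decision
    rationale: str          # documented for later training and audits
    before: Optional[str]
    after: Optional[str]


def review_and_publish(store, page_url, proposed_text, decision, rationale,
                       edited_text=None):
    """Apply a reviewer's decision and return the before/after record."""
    before = store.current(page_url)
    if decision is Decision.REJECT:
        after = before                      # nothing is published
    else:
        if decision is Decision.MODIFY:
            if edited_text is None:
                raise ValueError("MODIFY needs the reviewer's edited_text")
            text = edited_text
        else:
            text = proposed_text
        store.publish(page_url, text)       # old versions stay in history
        after = text
    return ReviewRecord(page_url, decision, rationale, before, after)
```

Because `publish` only appends, every earlier version remains available, which is what later makes a rollback possible.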

8. Measure AIV → 9. Rollback on dip → 10. Weekly iteration

Continuous measurement and refinement keep your agent effective:

Step 8: Measure AIV

- Track answer-share across AI platforms

- Monitor entity coverage and citation rates

- Compare performance with traditional SEO metrics

- Analyze changes in user behavior patterns

Step 9: Roll back on drops

- Establish performance thresholds that trigger automatic rollbacks

- Implement monitoring systems that detect unexpected declines

- Create automated reversion processes for critical issues

- Document trigger events for later analysis

Step 10: Weekly iteration

- Review performance data and identify improvement opportunities

- Update agent parameters based on observed outcomes

- Refine prompts and decision criteria

- Expand the agent’s capabilities based on successful patterns

This feedback loop ensures your agent continuously improves based on real-world performance data.
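The rollback rule in Step 9 can be reduced to a before/after comparison on a single metric. The sketch below assumes answer-share is the metric being watched and that a 10% relative drop is the trigger; both the threshold and the function names are illustrative choices, not recommendations from any tool.

```python
def relative_change(before, after):
    """Fractional change in a metric, e.g. -0.25 for a 25% drop."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before


def should_roll_back(before, after, max_drop=0.10):
    """True when the metric fell by at least max_drop (10% by default)."""
    return relative_change(before, after) <= -max_drop


def roll_back(versions):
    """Restore the previous version by re-publishing it as the newest entry.

    Appending instead of deleting keeps the failed version in the history,
    so the trigger event can still be analysed later.
    """
    if len(versions) < 2:
        raise ValueError("no earlier version to restore")
    return versions + [versions[-2]]
```

For example, if answer-share for a page falls from 0.20 to 0.15 after a change, `should_roll_back(0.20, 0.15)` returns `True` and `roll_back(["v1", "v2"])` yields `["v1", "v2", "v1"]`.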

11. Retro-evaluate with AutoGenBench

Regular evaluation maintains agent effectiveness over time:

Step 11: Retro-evaluation

- Use AutoGenBench to measure agent performance against benchmarks

- Review Guardrails logs to identify potential issues

- Conduct comprehensive quarterly reviews of agent impact

- Compare actual outcomes with predicted performance

This evaluation process identifies long-term patterns and opportunities for strategic improvement, ensuring your agent remains effective as search ecosystems evolve.

Risks & Guardrails

Hallucinations – stop with Guardrails JSON schema

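AI systems sometimes generate factually incorrect information, known as “hallucinations.” These errors can damage brand credibility if published without verification. JSON schema validation through tools like Guardrails AI is a useful first line of defense: it cannot verify facts by itself, but it can force every output into a structure, for example one where each claim must cite a source, that makes unsupported statements easy to detect and block.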

This approach:

- Defines explicit structures for agent outputs

- Validates generated content against these structures

- Flags content that doesn’t conform to expected patterns

- Blocks publication of flagged content until a source check or a human reviewer clears it

Combined with source verification and human review, these guardrails let organizations automate content optimization while protecting factual accuracy and brand integrity.
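Structural validation confirms the shape of an output, so a simple grounding check is a natural companion. The sketch below assumes a hypothetical output format in which every claim carries a `source_id`, and it flags any figure in a claim that does not appear in the cited source text. It is a deliberately narrow heuristic, not a feature of Guardrails AI, and it only catches numeric mismatches.

```python
import re

# Matches figures such as "18", "12.5" or "70%".
NUMBER = re.compile(r"\d+(?:\.\d+)?%?")


def check_claims(claims, sources):
    """Return a list of (claim_index, reason) for claims that fail the check.

    claims:  list of dicts with "text" and "source_id" keys (assumed format)
    sources: dict mapping source_id to the source document's text
    """
    problems = []
    for i, claim in enumerate(claims):
        source_id = claim.get("source_id")
        if source_id not in sources:
            problems.append((i, "missing or unknown source_id"))
            continue
        source_numbers = set(NUMBER.findall(sources[source_id]))
        for number in NUMBER.findall(claim.get("text", "")):
            if number not in source_numbers:
                problems.append((i, f"figure {number} not found in source"))
    return problems


sources = {"s1": "CTR rose 18% for jobs in Sydney queries."}
claims = [
    {"text": "CTR increased by 18%.", "source_id": "s1"},
    {"text": "CTR increased by 28%.", "source_id": "s1"},
    {"text": "CTR doubled overnight."},
]
problems = check_claims(claims, sources)
```

Here the first claim passes, the second is flagged because 28% does not appear in the source, and the third is flagged for citing no source at all. Anything flagged would go to human review rather than straight to publication.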

Brand-tone drift – style-guide checkers

Autonomous content optimization can sometimes result in subtle shifts away from established brand voice and style. To prevent this drift, implement automated style-guide checkers that verify content against your established brand standards.

These systems:

- Compare generated content against brand style guidelines

- Flag deviations from approved terminology or phrasing

- Ensure consistent tone across all content

- Preserve brand identity even with automated content generation

Regular style checking keeps brand presentation consistent across all content, whether human-written or AI-generated.
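A basic style-guide checker can be little more than a set of rules applied to each draft. The sketch below assumes a hypothetical style guide with banned terms, preferred spellings, and a sentence-length limit. Tone itself is much harder to test mechanically, so a rule set like this is best treated as a first filter ahead of human review.

```python
import re

# Hypothetical brand rules; a real guide would be loaded from a shared file.
STYLE_GUIDE = {
    "banned": ["cheap", "guaranteed"],
    "preferred": {"e-mail": "email", "sign-in": "sign in"},
    "max_sentence_words": 30,
}


def check_style(text, guide=STYLE_GUIDE):
    """Return a list of human-readable style issues found in text."""
    issues = []
    lowered = text.lower()
    for term in guide["banned"]:
        if re.search(rf"(?<!\w){re.escape(term)}(?!\w)", lowered):
            issues.append(f"banned term: {term}")
    for wrong, right in guide["preferred"].items():
        if re.search(rf"(?<!\w){re.escape(wrong)}(?!\w)", lowered):
            issues.append(f"use '{right}' instead of '{wrong}'")
    # Split on sentence-ending punctuation followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", text):
        word_count = len(sentence.split())
        if word_count > guide["max_sentence_words"]:
            issues.append(f"sentence too long ({word_count} words)")
    return issues
```

Running `check_style("Send us an e-mail for a guaranteed quote.")` reports the banned term and the non-preferred spelling, while a clean sentence returns an empty list.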

SERP volatility – threshold-based rollback

Search results can change rapidly, sometimes causing unexpected performance declines following optimization. Implementing threshold-based rollback systems provides protection against these volatile conditions:

- Establish performance baselines for key metrics

- Define thresholds that trigger automatic intervention

- Implement monitoring systems that detect significant declines

- Create automated reversion processes that restore previous versions

This safety mechanism prevents temporary search volatility from causing lasting damage to your digital presence.
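Because search metrics are noisy, a rollback trigger usually needs to separate a sustained decline from ordinary day-to-day swings. One possible approach, sketched below, sets a floor a few standard deviations under the baseline mean and only fires after several consecutive readings below it. The multiplier `k` and the `min_days` window are assumptions to tune, not established values.

```python
from statistics import mean, stdev


def decline_floor(baseline_values, k=2.0):
    """Lowest value still treated as normal: baseline mean minus k std devs."""
    return mean(baseline_values) - k * stdev(baseline_values)


def sustained_decline(baseline_values, recent_values, k=2.0, min_days=3):
    """True if recent_values stay below the floor for min_days in a row."""
    floor = decline_floor(baseline_values, k)
    run = 0
    for value in recent_values:
        run = run + 1 if value < floor else 0   # any recovery resets the run
        if run >= min_days:
            return True
    return False


# Daily clicks before the change (baseline) and after it (recent).
baseline_clicks = [100, 102, 98, 101, 99]
noisy_week = [95, 101, 94, 93]      # dips, but recovers in between
falling_week = [95, 94, 93]         # three low readings in a row
```

With this baseline the floor sits just under 97 clicks, so `noisy_week` does not trigger a rollback while `falling_week` does.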

DMA compliance – keep two-year prompt/output logs

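European regulation of large platforms and AI systems is tightening. The Digital Markets Act (DMA) places its direct obligations on designated “gatekeeper” platforms rather than on individual site owners, but organizations that automate content with AI still benefit from an audit trail they can produce on request. Maintaining comprehensive logs supports this: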

- Record all prompts used by your AI SEO agent

- Store outputs generated by the system

- Maintain decision logs showing human review and approval

- Keep these records for at least two years (or as required by applicable regulations)

These logs provide an audit trail demonstrating responsible AI use and regulatory compliance, reducing legal and reputation risks.
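The logging practice above can be sketched as an append-only JSON Lines file plus a retention check. The field names, the 730-day approximation of two years, and the function names are all assumptions; the actual retention period should follow whatever rules apply to your organization.

```python
import json
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=730)  # assumed: roughly two years


def make_record(prompt, output, reviewer, decision, now=None):
    """Build one audit entry covering prompt, output, and the human decision."""
    now = now or datetime.now(timezone.utc)
    return {
        "timestamp": now.isoformat(),
        "prompt": prompt,
        "output": output,
        "reviewer": reviewer,
        "decision": decision,
    }


def append_record(path, record):
    """Append the entry as one JSON line; existing lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(record) + "\n")


def is_expired(record, now=None):
    """True once the entry is older than the retention period."""
    now = now or datetime.now(timezone.utc)
    return now - datetime.fromisoformat(record["timestamp"]) > RETENTION
```

Storing timestamps in UTC with an explicit offset keeps the retention check unambiguous when logs are read back on a different machine.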

Metrics & Dashboards

AIV %, answer-share trend, entity-coverage score

New metrics are emerging to measure visibility in AI-driven search environments:

AIV percentage measures the proportion of your total brand visibility that comes from AI-generated results rather than traditional web pages. Tracking this figure over time helps quantify the growing importance of AI visibility for your brand.

Answer-share trend tracks how frequently your content is used as a source for AI-generated answers to relevant queries. Increasing this metric indicates growing authority and relevance in your topic areas.

Entity-coverage score evaluates how comprehensively AI systems represent your brand entities (products, services, key topics). A higher score indicates that AI systems have more complete information about your offerings.

These metrics provide insight into your brand’s presence within AI-driven search ecosystems, complementing traditional SEO metrics.
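There is no standard formula for these metrics yet, so the sketch below shows one possible way to operationalise them. It assumes you have counts of brand appearances in AI results and in traditional results, a sample of AI answers with their cited domains, and a list of brand entities to look for. Entity coverage is simplified here to the fraction of entities mentioned at all.

```python
def aiv_percentage(ai_appearances, web_appearances):
    """Share of total brand appearances that come from AI-generated results."""
    total = ai_appearances + web_appearances
    return 100.0 * ai_appearances / total if total else 0.0


def answer_share(cited_domains_per_answer, brand_domain):
    """Fraction of sampled AI answers that cite the brand's domain."""
    if not cited_domains_per_answer:
        return 0.0
    cited = sum(1 for domains in cited_domains_per_answer
                if brand_domain in domains)
    return cited / len(cited_domains_per_answer)


def entity_coverage(brand_entities, answer_texts):
    """Fraction of brand entities mentioned in at least one sampled answer."""
    if not brand_entities:
        return 0.0
    corpus = " ".join(answer_texts).lower()
    found = [entity for entity in brand_entities if entity.lower() in corpus]
    return len(found) / len(brand_entities)
```

As a worked example, 30 AI appearances against 70 traditional ones gives an AIV of 30%, and a brand cited in 2 of 4 sampled answers has an answer-share of 0.5. Tracking the same sample of queries week over week is what turns these snapshots into a trend.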

ROI proxies, explainability log

Measuring the business impact of AI visibility requires new approaches to ROI calculation:

ROI proxy metrics help quantify the value of AI visibility when direct conversion tracking isn’t possible. These might include:

- Brand recall improvements

- Consideration rate changes

- Changes in branded search volume

- Shifts in audience perception

Explainability logs document which data points informed AI-generated content, creating transparency around how your brand is represented. These logs:

- Record the sources used to generate AI answers

- Document which content elements were selected

- Track how information was synthesized or summarized

- Provide an audit trail for compliance purposes

Together, these metrics and logs create accountability for AI SEO initiatives and help demonstrate business impact beyond traditional traffic metrics.

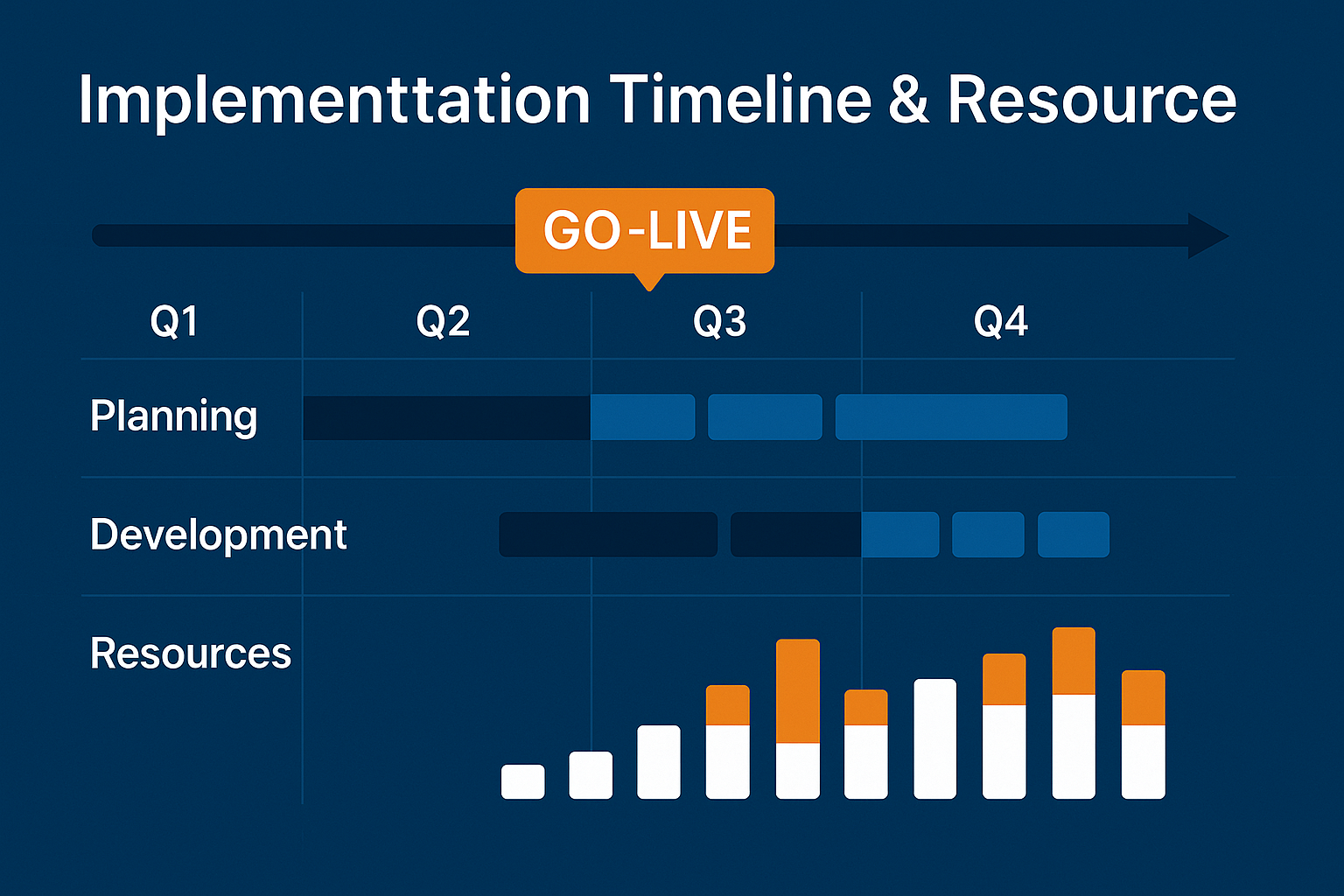

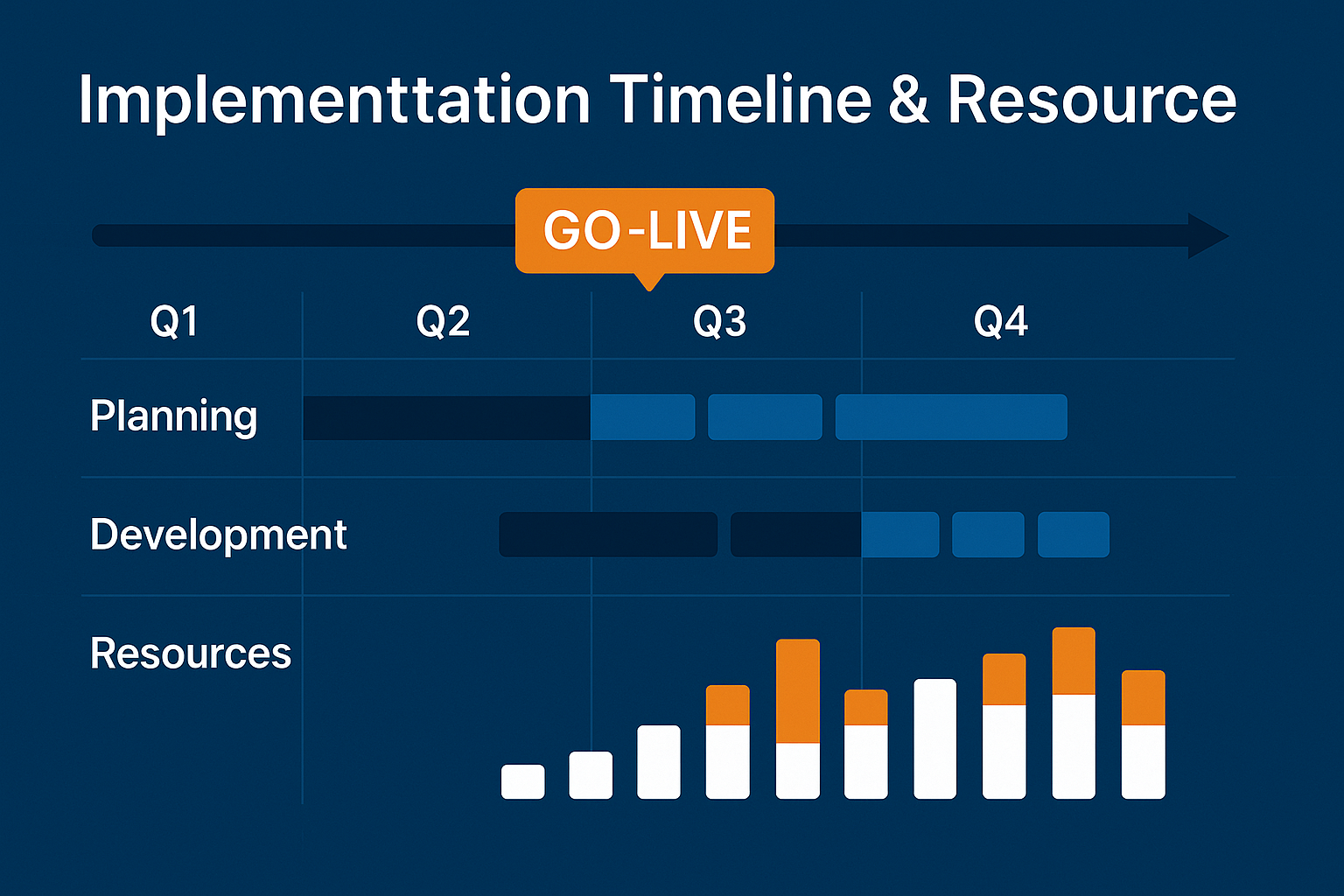

Timeline & Resource Estimate

Week 1: Data ingest & vector setup (25 hours)

The initial phase focuses on establishing the foundation for your AI SEO agent:

- Configure data collection from website, analytics, and external sources

- Set up vector database infrastructure

- Implement content chunking and vectorization

- Run initial retrieval tests and tune chunking and search settings based on the results

This phase requires approximately 25 hours of work, primarily from technical team members with data engineering expertise.

Week 2: Agent logic + guardrails (30 hours)

The second week focuses on developing the agent’s intelligence and safety mechanisms:

- Implement decision logic and optimization rules

- Set up JSON schema validation using Guardrails AI

- Create brand voice verification systems

- Develop approval workflows and human review interfaces

This phase requires approximately 30 hours, with contributions from both technical and content-focused team members.

Week 3: Stage → prod + KPI baseline (20 hours)

The final implementation phase transitions the agent from testing to production:

- Deploy the agent to staging environment for final testing

- Establish baseline KPI measurements

- Create monitoring dashboards

- Transition to production environment

- Implement ongoing performance tracking

This phase requires approximately 20 hours, with emphasis on testing, measurement, and operational integration.

Conclusion & Next Actions

The search landscape has fundamentally transformed. With more than 60% of US and EU desktop searches now ending without a website click, brands must adapt to maintain visibility in this “answer first, clicks later” world. Building autonomous AI SEO agents is one of the most promising strategies for navigating this new reality.

These systems—powered by vector memory, orchestration frameworks like CrewAI or n8n, and robust guardrails—enable brands to monitor, adapt, and optimize at machine speed. By tracking new metrics like answer-share and entity coverage, organizations can measure their presence in AI-generated results and continuously improve their visibility.

Whether you choose a low-code approach using tools like Zapier Agents or a code-first implementation with Prefect or CrewAI, the key is to start building your AI visibility capabilities now. Begin with one of the five deploy-today automations, establish your measurement framework, and create iterative improvement cycles. The organizations that master AI visibility today will maintain their competitive edge even as zero-click behaviors continue to dominate the search landscape.

For more guidance on building your technical SEO foundation, explore our articles on site speed optimization, crawlability and indexability, and structured data implementation.